Engineering a Synthetic Image Rendering Framework for Spacecraft Vision-Based Navigation

11.04.2025

A surge in demand for autonomous operations in close-proximity space missions calls for relative navigation solutions based on visual sensors. In this project, you will work with Unreal Engine to realise a synthetic image rendering framework for training AI for such missions. This comprehensive project offers dedicated scopes for Game Research Labs as well as Master's and Bachelor's theses.

Problem

The ever-increasing space debris population, the demand for sustainable spaceflight operations, a reinvigorated interest in deep space exploration and the construction of space stations have led to a surge in demand for autonomous operations in close-proximity missions such as Active Debris Removal (ADR) and On-Orbit Servicing, Assembly, and Manufacturing (OSAM). The common denominator of these missions is unconventional close-proximity interaction with non-cooperative objects, which possess neither a functioning attitude determination and control system nor a communication system. An active satellite operating in close proximity to such an object therefore requires a relative navigation solution based on visual sensors, such as monocular cameras or LIDAR.

Comparable vision-based tasks on Earth, most prominently autonomous driving, have become prime candidates for AI. A major challenge, however, is the generation of training data. Unlike in terrestrial applications, acquiring real image data for AI training in orbit is far too complicated, risky and expensive to be practical. It is therefore necessary to rely on synthetic images for training AI on computer vision (CV) tasks for space applications. Several synthetic image datasets have been generated over the years, some even supplemented with real images. These, however, have several drawbacks:

- Limited realism: On-orbit lighting conditions are typically simulated only partially and with varying degrees of realism. Certain light effects, such as material reflection or Earth albedo, are often simplified or not replicated at all.

- CAD models for target objects: Highly detailed engineering models of the observed objects are typically used without optimisation for rendering. While these CAD models are geometrically precise, their surface textures and materials are usually simplified and can result in a “stereotypical” video-game look, whereas objects such as the Earth appear photorealistic because they are stitched together from real images.

- Physical mismatch between the scene assets: Background assets are often pasted into the scene from real images without considering whether their lighting matches the overall scene.

- Simplified camera emulation: Little effort is spent on faithfully replicating the specific characteristics of an optical sensor, such as its intrinsics, noise and artifacts.

- Closed-source: Additional images cannot be generated and the pipeline cannot be modified, because the software used for image generation is not made available.

As a result, state-of-the-art datasets are typically relegated to benchmarking, pose estimation challenges or case studies.
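To make the "simplified camera emulation" drawback more concrete, the following is a minimal sketch, assuming an ideal pinhole camera without lens distortion, of how camera intrinsics (focal lengths in pixels and the principal point) map a point in the camera frame to pixel coordinates, and how a physical lens and sensor specification converts into those intrinsics. The class and function names are hypothetical, and the Gaussian noise term is only a crude placeholder: a faithful sensor model would also include distortion, signal-dependent shot noise, blur and other artifacts.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PinholeIntrinsics:
    """Ideal pinhole camera intrinsics in pixels (no skew, no lens distortion)."""

    fx: float  # focal length along the image x-axis, in pixels
    fy: float  # focal length along the image y-axis, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels

    @classmethod
    def from_sensor(cls, focal_length_mm: float, sensor_width_mm: float,
                    sensor_height_mm: float, width_px: int, height_px: int) -> "PinholeIntrinsics":
        """Convert a physical lens/sensor specification to pixel units,
        placing the principal point at the image centre."""
        fx = focal_length_mm * width_px / sensor_width_mm
        fy = focal_length_mm * height_px / sensor_height_mm
        return cls(fx, fy, width_px / 2.0, height_px / 2.0)

    def project(self, x: float, y: float, z: float) -> tuple[float, float]:
        """Project a point given in the camera frame (z along the optical axis)
        to pixel coordinates (u, v)."""
        if z <= 0:
            raise ValueError("point must lie in front of the camera (z > 0)")
        return self.fx * x / z + self.cx, self.fy * y / z + self.cy


def add_read_noise(pixels: list[float], sigma: float, rng: random.Random) -> list[float]:
    """Add zero-mean Gaussian noise to normalised intensities and clip to [0, 1].
    Crude placeholder only: real sensors also show signal-dependent shot noise."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]
```

For example, `PinholeIntrinsics(1000.0, 1000.0, 640.0, 512.0).project(0.1, -0.05, 10.0)` yields (650.0, 507.0). A 50 mm lens on a 36 x 24 mm sensor read out at 1920 x 1280 pixels gives fx = fy ≈ 2666.7 px with the principal point at (960, 640). Matching these numbers to a specific flight camera is what aligning the rendering view with that camera amounts to in the pinhole approximation.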

Objectives

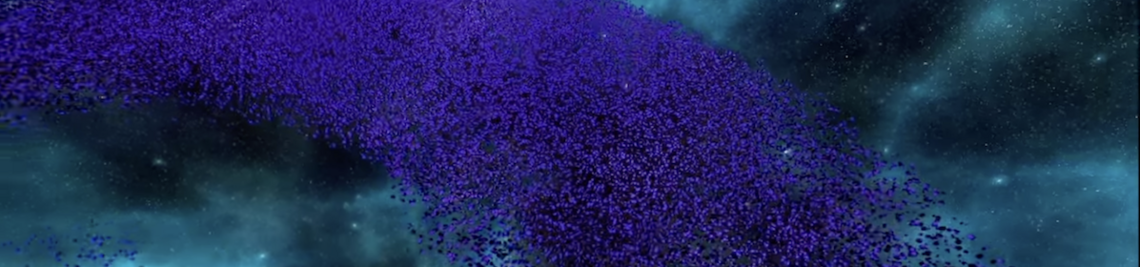

We want to develop a highly modular synthetic image generation framework that goes beyond the current state of the art. The image above, created in cooperation with a visual effects specialist, shows an example of the realism we envision. The goal is not to produce just another dataset but the whole generation pipeline, with a modularity that allows the camera, target object, background and lighting to be interchanged, eventually enabling the generation of synthetic images for any orbit, any object, and any vision-based sensor. This high degree of realism shall make it possible to confidently train AI for CV applications in space on purely synthetic images, enabling real deployment rather than just pose estimation challenges and benchmarking.
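The interchangeability of camera, target object, background and lighting described above could be expressed as a scene description in which each component is an independent, swappable part. The following is a minimal sketch under that assumption, not the framework's actual design. All class names, field names and example values are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CameraConfig:
    """Monocular camera to emulate (hypothetical fields)."""
    name: str
    focal_length_mm: float
    sensor_width_mm: float
    resolution_px: tuple[int, int]  # (width, height)


@dataclass(frozen=True)
class LightingConfig:
    """Illumination inputs (hypothetical fields)."""
    sun_direction: tuple[float, float, float]  # unit vector towards the Sun, scene frame
    earth_albedo: bool  # whether sunlight reflected by the Earth is rendered


@dataclass(frozen=True)
class SceneConfig:
    """One render job; each component can be exchanged independently."""
    camera: CameraConfig
    target_asset: str  # identifier of the target model, e.g. a file path
    background: str    # identifier of the Earth/star-field background
    lighting: LightingConfig


def with_camera(scene: SceneConfig, camera: CameraConfig) -> SceneConfig:
    """Return a copy of the scene with only the camera swapped."""
    return replace(scene, camera=camera)
```

Because the configurations are frozen dataclasses, swapping one component produces a new scene description while the original stays valid. This makes it straightforward to render the same target under the same lighting through several camera models.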

Tasks

- create an orbit environment consistent with publicly available image datasets

- ensure proper lighting parameterisation, considering altitude and position relative to the Earth, the Sun and the stars

- adapt the rendering view to align with specific monocular cameras

- model potential target assets, considering common spacecraft materials and designs (high-detail models can be simplified)

- identify suitable publicly available image data to conduct systematic rendering comparisons
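The lighting task depends on where the target is relative to the Earth and the Sun. One ingredient is deciding whether the spacecraft is eclipsed by the Earth at all. The following is a minimal sketch of that check, assuming a spherical Earth, parallel sunlight and the simple cylindrical shadow model, which ignores the penumbra and atmospheric effects. The function names are hypothetical. In practice the Sun direction would come from an ephemeris, and Earth albedo and starlight are not modelled here.

```python
import math
from collections.abc import Sequence

EARTH_RADIUS_KM = 6378.137  # WGS-84 equatorial radius; the Earth is treated as a sphere


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def _norm(a: Sequence[float]) -> float:
    return math.sqrt(_dot(a, a))


def position_at_altitude(altitude_km: float, direction: Sequence[float]) -> list[float]:
    """Geocentric position at the given altitude above a spherical Earth, along `direction`."""
    n = _norm(direction)
    return [(EARTH_RADIUS_KM + altitude_km) * c / n for c in direction]


def in_earth_shadow(r_sat_km: Sequence[float], sun_dir: Sequence[float]) -> bool:
    """Cylindrical Earth-shadow test (umbra only, Sun rays assumed parallel).

    r_sat_km: satellite position relative to the Earth's centre, in km.
    sun_dir:  vector from the Earth's centre towards the Sun, same frame (any length).
    """
    n = _norm(sun_dir)
    s = [c / n for c in sun_dir]
    along = _dot(r_sat_km, s)  # signed distance along the Sun direction
    if along >= 0.0:
        return False  # satellite is on the sunward side of the Earth
    # Distance from the Earth-Sun line; inside the cylinder means the Earth blocks the Sun.
    perp = [r - along * c for r, c in zip(r_sat_km, s)]
    return _norm(perp) < EARTH_RADIUS_KM


def sun_visibility(r_sat_km: Sequence[float], sun_dir: Sequence[float]) -> float:
    """Direct-sunlight scale factor for a renderer's sun light: 0.0 in shadow, 1.0 otherwise."""
    return 0.0 if in_earth_shadow(r_sat_km, sun_dir) else 1.0
```

With the Sun along +x, a spacecraft at 500 km altitude on the -x side is in shadow. The same spacecraft above the terminator (+y) or on the day side (+x) is sunlit. A conical umbra/penumbra model would replace the cylinder test when soft shadow transitions matter.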

Contacts

Prof. Dr. Sebastian von Mammen

Prof. Dr.-Ing. Mohamed Khalil Ben-Larbi

khalil.ben-larbi@uni-wuerzburg.de

Markus Huwald, M.Sc.

markus.huwald@uni-wuerzburg.de